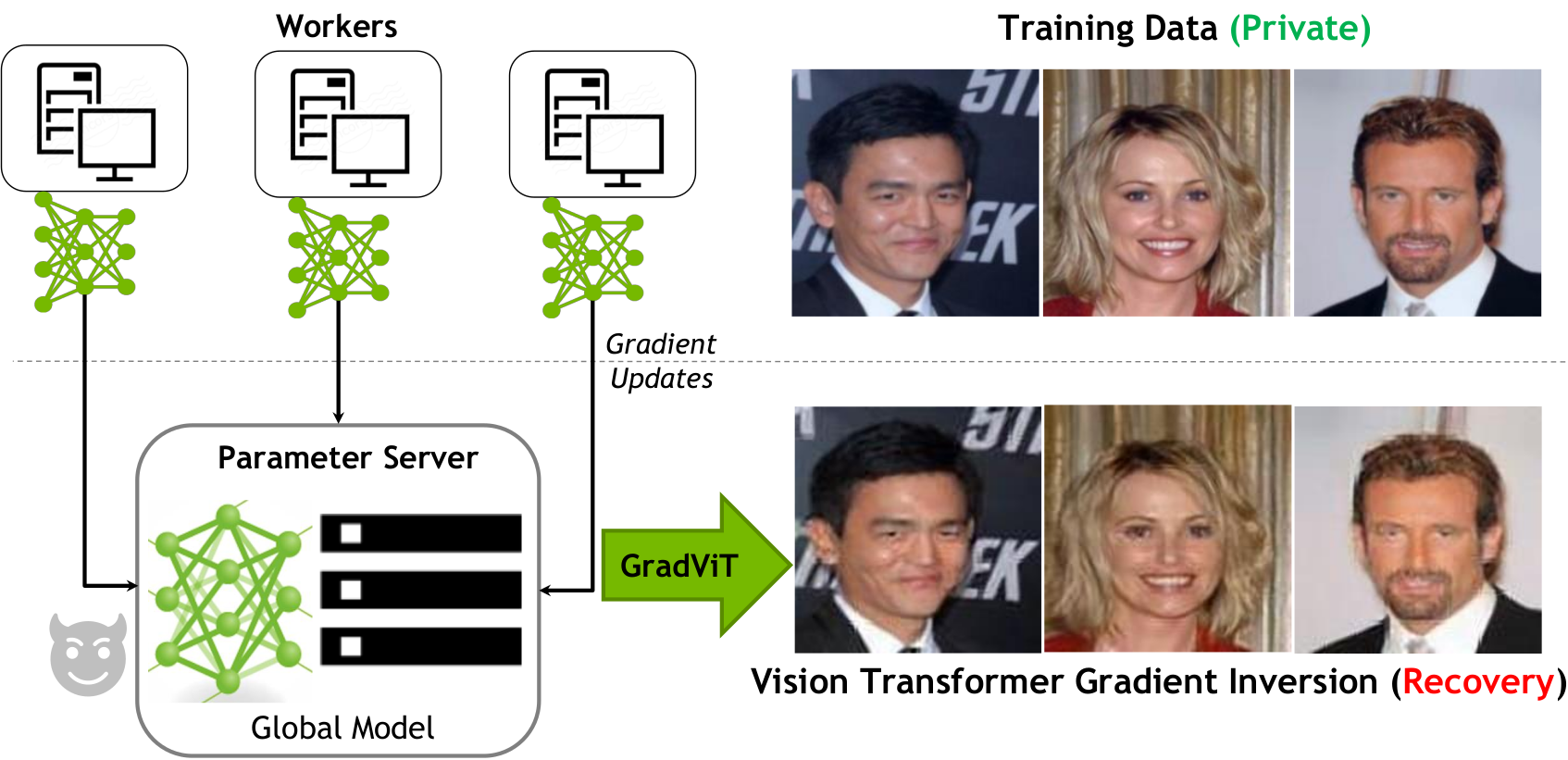

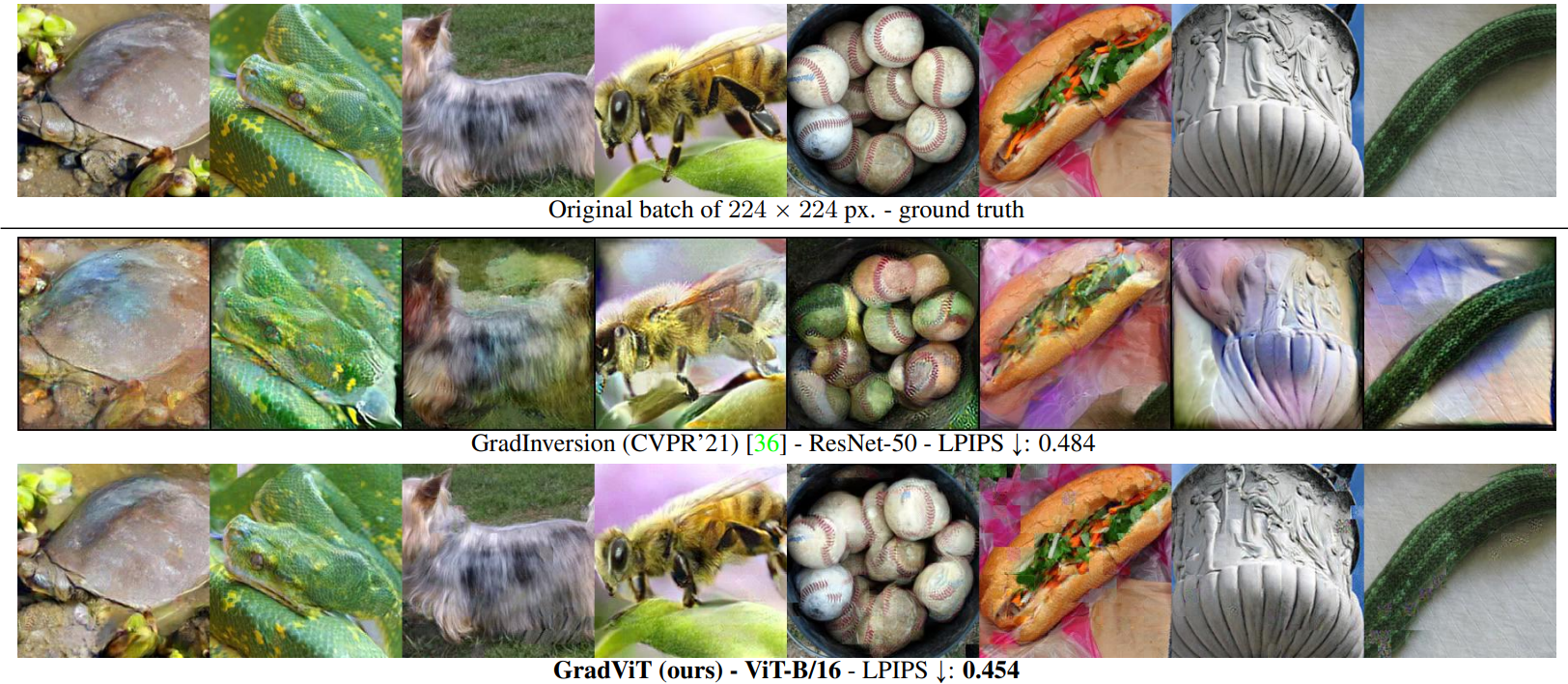

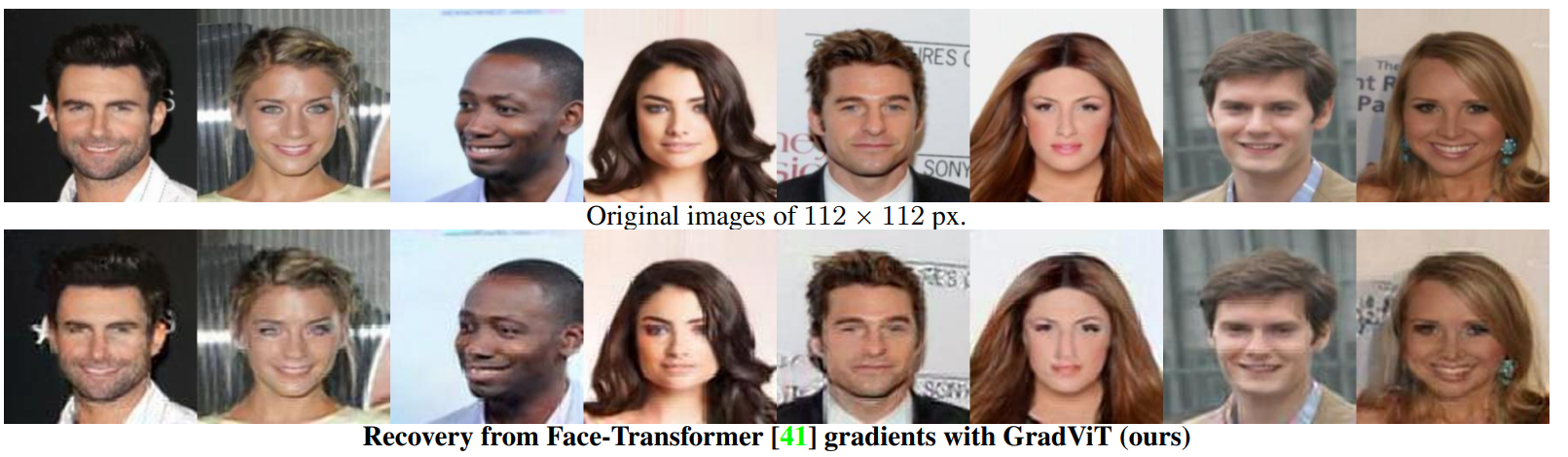

In this work we demonstrate the vulnerability of vision transformers (ViTs) to gradient-based inversion attacks. During this attack, the original data batch is reconstructed given model weights and the corresponding gradients. We introduce a method, named GradViT, that optimizes random noise into naturally looking images via an iterative process. The optimization objective consists of (i) a loss on matching the gradients, (ii) image prior in the form of distance to batch-normalization statistics of a pretrained CNN model, and (iii) a total variation regularization on patches to guide correct recovery locations. We propose a unique loss scheduling function to overcome local minima during optimization. We evaluate GadViT on ImageNet1K and MS-Celeb-1M datasets, and observe unprecedentedly high fidelity and closeness to the original (hidden) data. During the analysis we find that vision transformers are significantly more vulnerable than previously studied CNNs due to the presence of the attention mechanism. Our method demonstrates new state-of-the-art results for gradient inversion in both qualitative and quantitative metrics.

We show vision transformer gradients encode a surprising amount of information such that high-fidelity original image batches of high resolution can be recovered, see 112 × 112 pixel MS-Celeb-1M and 224 × 224 pixel ImageNet1K sample recovery above and more in experiments. Our method, GradViT, yields the first successful attempt to invert ViT gradients, not achievable by previous state-of-the-art methods. We demonstrate that ViTs, despite lacking batchnorm layers, suffer even more data leakage compared to CNNs. As insights we show that ViT gradients (i) encode uneven original information across layers, and (ii) attention is all that reveals.

GradViT: Gradient Inversion of Vision Transformers, CVPR 2022.

paper

@article{hatamizadeh2022gradvit,

title = {GradViT: Gradient Inversion of Vision Transformers},

author = {Hatamizadeh, Ali and Yin, Hongxu and Roth, Holger and Li, Wenqi and Kautz, Jan and Xu, Daguang and Molchanov, Pavlo},

journal = {arXiv preprint arXiv:2203.11894},

year = {2022},

}